Abstract

This paper addresses a complex question: Can educational research be both rigorous and relevant? The first eight years of the first decade of the 21st century were a time when federal support for educational research in the USA emphasized rigor above most other concerns, and the last two years may mark the beginning of a shift toward more emphasis on impact. The most desirable situation would be a balance between rigor and impact. Educational designers, teachers, and other practitioners would especially benefit from such a balance because it would likely enhance the impact of educational research. Educational design research is proposed as having enormous potential for striking an appropriate balance between rigor and relevance in the service of the educational needs of learners, teachers, designers, and society at large.

If we really want to improve the relation between research, policy, and practice in education, we need an approach in which technical questions about education can be addressed in close connection with normative, educational, and political questions about what is educationally desirable. The extent to which a government not only allows the research field to raise this set of questions, but actively supports and encourages researchers to go beyond simplistic questions about “what works” may well be an indication of the degree to which a society can be called democratic.

(Biesta, 2007, pp. 21-22)

The Pendulum Swings: Rigor and Relevance

On January 24, 2001, just four days after being inaugurated as the 43rd President of the United States of America, George W. Bush sought to establish himself as the “Education President” by announcing that he would push for legislation that would leave “no child behind” in educational achievement in the country. Maintaining that there had been no improvement in education for 30 years despite a near doubling of public spending per child, President Bush signed the “No Child Left Behind (NCLB) Act” into law a year later. The most salient components of NCLB are high standards, ubiquitous testing, and increased school-level accountability for student achievement (Simpson, Lacava, & Graner, 2004). While some hailed NCLB as the key to school reform (Slavin, 2003a; Subotnik & Walberg, 2006), others have questioned its impact on children and teachers in the nation’s poorest schools (Apple, 2007; Cochran-Smith & Lytle, 2006; Meier & Wood, 2004).

Another component of the Bush administration’s initiative to improve public education in the USA was the establishment of the Institute of Education Sciences (IES), which replaced the Office of Educational Research and Improvement (OERI) as the primary source of research funding from the U.S. Department of Education. Replacing the words “Educational Research and Improvement” with the words “Education Sciences” signaled a new emphasis on scientific rigor in education research. The first director of IES, Grover J. Whitehurst, relentlessly promoted what his agency called “scientifically-based research [that] involves the application of rigorous, systematic, and objective procedures to obtain reliable and valid knowledge relevant to education activities and programs” (U.S. Department of Education, n.d., italics added by the author).

The use of the term “rigorous” in referring to research procedures (i.e., methods) has been interpreted differently by proponents (Feuer, Towne, & Shavelson, 2002; Slavin, 2004, 2008) and opponents (Chatterji, 2004; Olson, 2004; Schoenfeld, 2006) of IES’s stated and tacit priorities. For example, Slavin (2002) argued that “Once we have dozens or hundreds of randomized or carefully matched experiments going on each year on all aspects of educational practice, we will begin to make steady, irreversible progress” (p. 19). Expressing a strong faith in the power of the kind of rigor he perceived in the randomized controlled trials used in medical research, Slavin (2003b) maintained that there are only five questions that need to be addressed to determine the validity of an educational research study:

- Is there a control group?

- Are the control and experimental groups assigned randomly?

- If it is a matched study, are the groups extremely similar?

- Is the sample size large enough?

- Are the results statistically significant?

In contrast, Olson (2004) criticized Slavin’s (2003a) enthusiasm for modeling educational research on the randomized controlled trials (RCTs) used by medical researchers. Among the problems Olson identified were that double-blind experiments are impossible in education, that implementation variance in educational contexts often severely reduces treatment differences, that causal agents are under-specified in education, and that the goals, beliefs, and intentions of students and teachers affect treatments in ways that are often unpredictable.

One of the most contentious initiatives of IES during the Bush administration was the establishment of the What Works Clearinghouse (WWC), which was “designed to provide teachers and others with a reliable and proven source of scientific evidence regarding effective and scientifically supported educational methods” (Simpson et al., 2004, p. 69). Noting the WWC’s obvious bias toward experimental studies, Chatterji (2004) wrote:

In the rush to emphasize generalized causal inferences on educational initiatives, the developers of the WWC standards end up endorsing a single research method to the exclusion of others. In doing so, they not only overlook established knowledge and theory on sound evaluation design; they also ignore critical realities about social, organizational, and policy environments in which education programs and interventions reside. (p. 3)

Ironically, WWC personnel have been able to identify very few educational programs and practices with evidence rigorous enough, by the WWC’s own criteria, to warrant inclusion in the What Works database. For example, a review of over 1,300 studies that examined the effect of teacher professional development on student achievement found that only nine met WWC standards for rigorous evidence (Yoon, Duncan, Lee, Scarloss, & Shapley, 2007).

Olson (2004) and others (cf. Chatterji, 2004; St. Pierre, 2006) have argued that the complexity of phenomena related to teaching and learning inevitably renders attempts to conduct controlled experiments, especially RCTs, in education fruitless. Schoenfeld (2009a) concluded that the WWC is “seriously flawed” (p. 185), not least because of its failure to address important differences in the assessment measures used in the experimental studies examining the effectiveness of various educational programs.

Fortunately, the pendulum that had swung so far to the right toward supposed rigor under the Bush administration may have begun to swing to the left toward much-needed relevance, although not as far or as fast as this author would like. In February 2010, John Q. Easton, the newly appointed head of IES in the administration of President Barack Obama, stated the following priorities for the agency:

- Make our research more relevant and useable. [italics added by author]

- Shift from a model of “dissemination” to a model of “facilitation.”

- Create stronger links between research, development and innovation.

- Build the capacity of states and school districts to use longitudinal data, conduct research and evaluate their programs.

- Develop a greater understanding of schools as learning organizations.

The emphasis on relevance is overdue. RCTs seek to establish immutable laws related to teaching and learning, but any that are found either exist at such a high level of abstraction that they cannot be applied to specific situations or are “too narrow for direct application in practice” (Schoenfeld, 2009b, p. 5). Cronbach (1975) clarified this dilemma 35 years ago, although many in the rigor camp of the rigor versus relevance debate obviously fail to remember or intentionally ignore his compelling words of caution:

Our troubles do not arise because human events are in principle unlawful; man and his creations are part of the natural world. The trouble, as I see it, is that we cannot store up generalizations and constructs for ultimate assembly into a network. (Cronbach, 1975, p. 123, italics in original)

Similarly, Glass (2008) wrote: “I do not believe that [research studies aimed at shaping policy], mired as they are in debates between research methods experts, have any determinative value in shaping the current nature of public education or its future” (p. 285). Glass went on to describe the weakness of research in other fields, including medicine, in settling policy disputes. One only has to consider the “scientific evidence” currently marshaled for and against vaccinating young children against diseases such as chicken pox, measles, mumps, polio, and rubella. Is it any wonder that sometimes vociferous debates still rage about a wide range of educational programs and practices such as:

- Whole Language Approaches to Teaching Reading (Allington, 2002)

- Constructivist Pedagogical Methods (Tobias & Duffy, 2009)

- Teaching Evolution in Science Education (Skehan & Nelson, 2000)

Questioning the Value of the Medical Research Model

The conviction that educational research would be greatly improved by emulating medical research, especially the widespread adoption of RCTs, remains strong (Slavin, 2008; also see the Best Evidence Encyclopedia at: http://www.bestevidence.org/), but it must be questioned. Interestingly, even some medical research experts claim that the complexity inherent in medical and health contexts usually renders the conclusions of RCTs difficult to apply to individual cases. For example, Gawande (2009) wrote:

I have been trying for some time to understand the source of our greatest difficulties and stresses in medicine. It is not money or government or the threat of malpractice lawsuits or insurance company hassles – although they all play their role. It is the complexity that science has dropped upon us and the enormous strains we are encountering in making good on its promise.

(Gawande, 2009, pp. 10-11)

The complex realities of medical research should give pause to those who make such recommendations (Dixon-Woods, Agarwal, Jones, Young, & Sutton, 2005; Goodman, 2002; Sanson-Fisher, Bonevski, Green, & D'Este, 2007). Consider oncology, the field that deals with the study and treatment of cancer. Until the early 1970s, there were no oncologists as distinct medical practitioners, and people diagnosed with cancer generally were sent to surgeons who tried to cut the cancer out of them. But over the last 40 years, cancer researchers and oncologists as well as other cancer specialists have collaborated in ambitious research and development efforts that have yielded a dramatic improvement in cancer survivorship around the world (Pardee & Stein, 2009). Randomized controlled trials have played a major role in these advances, but they are not the full story (Mukherjee, 2010).

Of course, it is obvious to anyone who has had cancer or has lost a loved one to the disease that cancer research still has a long way to go. Writing in The New Yorker, Gladwell (2010) described two primary schools of researchers seeking cures for cancer. “The first school, that of ‘rational design,’ believes in starting with the disease and working backward – designing a customized solution based on the characteristics of the problem” (Gladwell, 2010, p. 71) and careful consideration of the best available theory. In my mind, this approach is akin to the kind of educational design research (van den Akker, Gravemeijer, McKenney, & Nieveen, 2006; Kelly, Lesh, & Baek, 2008) that more, if not most, educational researchers should pursue because it is much more relevant than other approaches.

According to Gladwell (2010), “The other approach is to start with a drug candidate and then hunt for diseases that it might attack. This strategy, known as ‘mass screening,’ doesn’t involve a theory. Instead, it involves a random search for matches between treatments and diseases” (p. 72). This seems more in keeping with much existing educational research, especially in my own field of educational technology. As Cuban (1986, 2001) has described, for nearly 100 years, virtually every new technology to come along has been followed by a shotgun-blast-like surge in studies aimed at finding the educational efficacy of the target of the research. This has not worked in the past, and it won’t work in the future.

The Potential of Educational Design Research

As described in the virtual pages of the Educational Designer online journal, educational designers obviously have much to gain from research, but important questions remain unanswered concerning the focus and nature of that research by, for, and about the work of educational designers (Baughman, 2008). Black (2008) presents a compelling argument for how research and formative evaluation should drive educational design from the outset of a project. In an attempt to bridge “the cultures of educational research and educational design,” Schoenfeld (2009b) recommends more research focused on the design process itself so that educational designers can ultimately sharpen their “professional vision.” In response to Schoenfeld (2009b), Black (2009) reminds us of the importance of attending to professional development opportunities for teachers, who will ultimately determine the success (or lack thereof) of the innovations created by educational designers. Burkhardt (2009) suggests that educational designers should engage in an approach to research and development he describes as “strategic design,” which “focuses on the design implications of the interactions of the products, and the processes for their use, with the whole user system it aims to serve.” All three of these perspectives provide valuable insight into how the relevance of educational research can be enhanced for both educational designers and teachers. Elsewhere, these scholars and others have promoted research methods known variously as “design-based research” (Kelly, 2003), “development research” (van den Akker, 1999), “design experiments” (Brown, 1992; Collins, 1992; Reinking & Bradley, 2008), “formative research” (Newman, 1990), “engineering research” (Burkhardt, 2006), and “educational design research” (van den Akker, Gravemeijer, McKenney, & Nieveen, 2006; Kelly, Lesh, & Baek, 2008).

Arguably, educational design research is still in its infancy, but several notable researchers have applied this approach to rigorous long-term educational research and development initiatives. One outstanding example is the multi-year series of design experiments pursued by Cobb and his colleagues to enhance mathematics teaching and curricula (cf. Cobb & Gravemeijer, 2008; Cobb & Smith, 2008; Cobb, Zhao, & Dean, 2009).

I concur with most of the recommendations made by the scholars cited above, and my intention in this paper is to strengthen the rationale for educational design research as well as to prescribe some modest directions for addressing different types of validity within the context of educational design research. These ideas draw, on the one hand, on concerns about the impact of educational research that I have expressed for many years and, on the other, on personal experiences in developing and evaluating serious games and simulations. But first, I wish to expand a bit on the nature of the rigor versus relevance debate by focusing on educational technology research, the area in which I have worked for more than 35 years.

What Can We Expect from Research Emphasizing Rigor?

Charles Desforges (2001), former Director of the Teaching and Learning Research Programme of the Economic and Social Research Council in the United Kingdom, cautioned against an overemphasis on rigor to the detriment of relevance: “The status of research deemed educational would have to be judged, first in terms of its disciplined quality and secondly in terms of its impact. Poor discipline is no discipline. And excellent research without impact is not educational” (p. 2). Unfortunately, it is exceedingly difficult to detect the impact of educational research (Bransford, Stipek, Vye, Gomez, & Lam, 2009; Broekkamp & van Hout-Wolters, 2007; Kaestle, 1993; Lagemann, 2000). Although for decades academic journals devoted to educational research have been replete with reports of studies that arguably had sufficient scientific rigor to pass peer review, this ever-expanding body of literature clearly lacks substantial evidence of impact, especially in terms of what teachers and their students do day in and day out (Vanderlinde & van Braak, 2010).

Certainly, impact is hard to find in traditional indicators of educational achievement. Consider educational achievement in the United States of America. Most reputable sources indicate that American K-12 students have been under-achieving for several decades (Darling-Hammond, 2010; Gaston, Anderson, Su, & Theoharis, 2009; Ravitch, 2010). For example, whereas 50 years ago the USA led the world in high school graduation rates, today many countries around the globe exceed the USA’s disappointing national average of 68%. The Trends in International Mathematics and Science Study (TIMSS) results also highlight an unacceptable degree of underachievement in U.S. schools (Howie & Plomp, 2006).

A critical question must be asked: Why, during several decades of declining or stagnant educational attainment in the USA and arguably elsewhere (cf. Coady, 2000; Hussey & Smith, 2009), have publications related to educational research increased so dramatically? Several years ago, Ulrich’s Periodicals Directory, the definitive reference for bibliographic information about scholarly publications, listed 1,226 active, refereed scholarly journals related to education (Togia & Tsigilis, 2006), and there are no doubt more publication outlets today with the explosion in Web-based journals. Each year, an increasing number of educational research journals are filled with “refereed” articles, and yet key indicators of student learning have failed to improve and in some cases have actually declined. Most published research papers are never cited by any other researcher. According to Jacsó (2009), only 40% of the articles in the top-rated science and social science journals were cited by other researchers in the five-year period after their publication.

Perhaps, it might be argued, it is too much to expect any single educational research paper to have substantial impact on practice. Fair enough. So what kind of guidance do syntheses of numerous individual research publications provide educational designers, teachers, and other practitioners?

Meta-analysis is a procedure for examining the combined effects of numerous individual experimental studies by computing a standardized “effect size” (Glass, 1978). Although controversial, it has become one of the most widely used methods of synthesizing quantitative research results in the social sciences as well as in medical research (Cooper, Hedges, & Valentine, 2009; Leandro, 2005). Hattie (2009) synthesized more than 800 meta-analyses related to achievement in K-12 education and argues that an effect size below 0.40 is unacceptable for any educational program purported to be innovative. Among the 138 educational treatments examined in Hattie’s study, the most effective are the building blocks of any robust educational design, such as formative evaluation to teachers (0.90 effect size), teacher clarity (0.77), and feedback to students (0.73), whereas some of the least effective are among the favorites of researchers and developers in the field of educational technology, such as computer-assisted instruction (0.37), simulations and games (0.33), and web-based learning (0.18). Although the findings of some meta-analyses merely seem to state the obvious (e.g., prior achievement predicts future achievement), the findings can be more subtle. Hattie describes his insight into the seemingly obvious variable of feedback:
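To make the idea of a standardized effect size concrete, the following is a minimal sketch, not Hattie's (2009) actual procedure (which aggregated published meta-analyses). It assumes two-group studies summarized by means, standard deviations, and sample sizes, computes Cohen's d for each, and combines them with a simple fixed-effect, inverse-variance weighted mean. The `Study` class, the function names, and all study values are hypothetical, invented purely for illustration.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Study:
    """Summary statistics for one two-group study (hypothetical values)."""
    mean_treatment: float
    mean_control: float
    sd_treatment: float
    sd_control: float
    n_treatment: int
    n_control: int


def cohens_d(s: Study) -> float:
    """Standardized mean difference: (treatment - control) / pooled SD."""
    pooled_var = (
        (s.n_treatment - 1) * s.sd_treatment ** 2
        + (s.n_control - 1) * s.sd_control ** 2
    ) / (s.n_treatment + s.n_control - 2)
    return (s.mean_treatment - s.mean_control) / math.sqrt(pooled_var)


def variance_of_d(d: float, s: Study) -> float:
    """Common large-sample approximation to the sampling variance of d."""
    n1, n2 = s.n_treatment, s.n_control
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def pooled_effect(studies: Sequence[Study]) -> float:
    """Fixed-effect pooled estimate: mean of d weighted by 1 / variance."""
    weighted_sum = 0.0
    total_weight = 0.0
    for s in studies:
        d = cohens_d(s)
        weight = 1.0 / variance_of_d(d, s)
        weighted_sum += weight * d
        total_weight += weight
    return weighted_sum / total_weight


HINGE = 0.40  # Hattie's (2009) threshold, as reported in the text

# Three invented studies, for illustration only.
studies = [
    Study(78.0, 74.0, 10.0, 10.0, 40, 40),   # d = 0.40
    Study(71.0, 70.0, 8.0, 8.0, 120, 120),   # d = 0.125
    Study(82.0, 76.0, 12.0, 12.0, 30, 30),   # d = 0.50
]

overall = pooled_effect(studies)
print(f"pooled d = {overall:.2f}; meets hinge: {overall >= HINGE}")
```

In this invented example the largest study has the smallest effect and therefore carries the most weight, so the pooled estimate comes out at about 0.24, below the 0.40 threshold even though two individual studies reach or exceed it. Real meta-analyses typically also test for heterogeneity and often use random-effects models instead.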

…I discovered that feedback was most powerful when it was from the student to the teacher [rather than from the teacher to the student as commonly viewed]… When teachers seek, or at least are open to, feedback from students as to what students know, what they understand, where they make errors, when they have misconceptions, when they are not engaged – then teaching and learning can be synchronized and powerful. Feedback to teachers helps make learning visible. (p. 173)

Why have meta-analyses confirmed and clarified some of the basic building blocks of sound educational design, but failed to support specific educational technologies? Within the field of educational technology, traditional experimental research methods that have emphasized rigor over relevance have yielded a disappointing picture that is best summed up by the term “no significant differences” (Russell, 2001). For example, exhaustive meta-analyses of the quasi-experimental research studies focused on distance education, hypermedia, and online learning report effect sizes that are very low, essentially amounting to no significant differences (Bernard, Abrami, Lou, Borokhovski, Wade, Wozney, Wallet, Fiset, & Huang, 2004; Dillon & Gabbard, 1998; Fabos & Young, 1999). The types of media comparison studies synthesized in such meta-analyses have a long history (Clark, 1983). For example, Saettler (1990) reported that experimental comparisons of educational films with classroom instruction began in the 1920s with no significant differences found, and similar research designs have been applied to every new educational technology since.

These studies fail because they confound educational delivery modes with pedagogical methods. One of the hottest new educational technologies is the Apple iPad. The Apple website (http://www.apple.com/education/ipad/) boasts: “iPad apps are expanding the learning experience both inside and outside the classroom. From interactive lessons to study aids to productivity tools, there’s something for everyone. With iPad, the classroom is always at your fingertips.” Very soon, studies that compare Apple iPad learning with classroom instruction will inevitably appear, as they already have with devices such as the iPhone (Hu & Huang, 2010) and the iPod (Kemp, Myers, Campbell, & Pratt, 2010). And so will yet more findings of “no significant differences,” because most such studies focus on the wrong variables (instructional delivery modes) rather than on meaningful pedagogical dimensions (e.g., alignment of objectives with assessment, pedagogical design factors, time-on-task, learner engagement, and feedback).

What Can We Expect from Research Emphasizing Relevance?

A fundamental requirement for educational research emphasizing relevance is a clear understanding of what educational designers, teachers, and other stakeholders consider to be relevant with respect to teaching, learning, and outcomes (Black, 2008; Vanderlinde & van Braak, 2010). For example, one of the primary concerns of school administrators, teachers, and parents has long been Academic Learning Time (ALT), defined as the amount of time students are actively engaged in meaningful learning per school day (Yair, 2000). Although numerous proposals have been made for extending the school day or the school year, what people ultimately care about is increasing ALT. As Berliner (1991) wrote: “Thus, for most people, ALT is not only an instructional time variable to be used in research, evaluation, auditing, and classroom consultation, it is a measure of that elusive characteristic we call ‘quality of instruction’” (p. 31).

Many studies have found that the average ALT per student in a seven-hour public school day in the USA is unacceptably low (Berliner, 1991; Yair, 2000). Thus, it would seem reasonable to assume that any educational design that can increase ALT will increase achievement. Increasing ALT, however, turns out to be much more challenging than it sounds: educational designers and teachers alike constantly struggle to find ways to do so (Clark & Linn, 2003).

Nonetheless, research that properly emphasizes relevance should focus on time as one of the most important variables of concern for teachers and students. More than 80 years ago, Goldberg and Pressey (1928) reported that the average sixth grader spent seven hours a day in school and just under two and a half hours per day in what they labeled “amusements,” defined as “playing, motion picture shows, reading, and riding” (p. 274). Although time for school has remained largely static, time for “amusements” appears to have tripled since then. In 2010, the Kaiser Family Foundation (Rideout, Foehr, & Roberts, 2010) reported that:

Eleven- to fourteen-year-olds average just under nine hours of media use a day (8:40), and when multitasking is taken into account, pack in nearly 12 hours of media exposure (11:53). The biggest increases are in TV and video game use: 11- to 14-year-olds consume an average of five hours a day (5:03) of TV and movie content—live, recorded, on DVD, online, or on mobile platforms—and spend nearly an hour and a half a day (1:25) playing video games. (p. 5)
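The "tripled" comparison can be checked with simple arithmetic. The sketch below assumes that Goldberg and Pressey's (1928) "amusements" and the Kaiser Family Foundation's "media use" are broadly comparable categories, which they are not exactly, so the ratios are rough. Using 2.5 hours as the 1928 figure (the text says "just under") makes the ratios slightly conservative. The helper `to_hours` is a hypothetical name introduced for illustration.

```python
def to_hours(hh_mm: str) -> float:
    """Convert an 'H:MM' duration string to decimal hours."""
    hours, minutes = hh_mm.split(":")
    return int(hours) + int(minutes) / 60


# Goldberg and Pressey (1928): "just under two and a half hours" per day.
amusements_1928 = 2.5

# Rideout, Foehr, and Roberts (2010), 11- to 14-year-olds.
media_use_2010 = to_hours("8:40")        # media use per day
media_exposure_2010 = to_hours("11:53")  # counting multitasking

ratio_use = media_use_2010 / amusements_1928
ratio_exposure = media_exposure_2010 / amusements_1928
print(f"media use: {ratio_use:.1f}x; media exposure: {ratio_exposure:.1f}x")
```

On these assumptions, daily media use is roughly 3.5 times the 1928 figure, and media exposure with multitasking is close to 5 times, which is consistent with the claim that such time has at least tripled.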

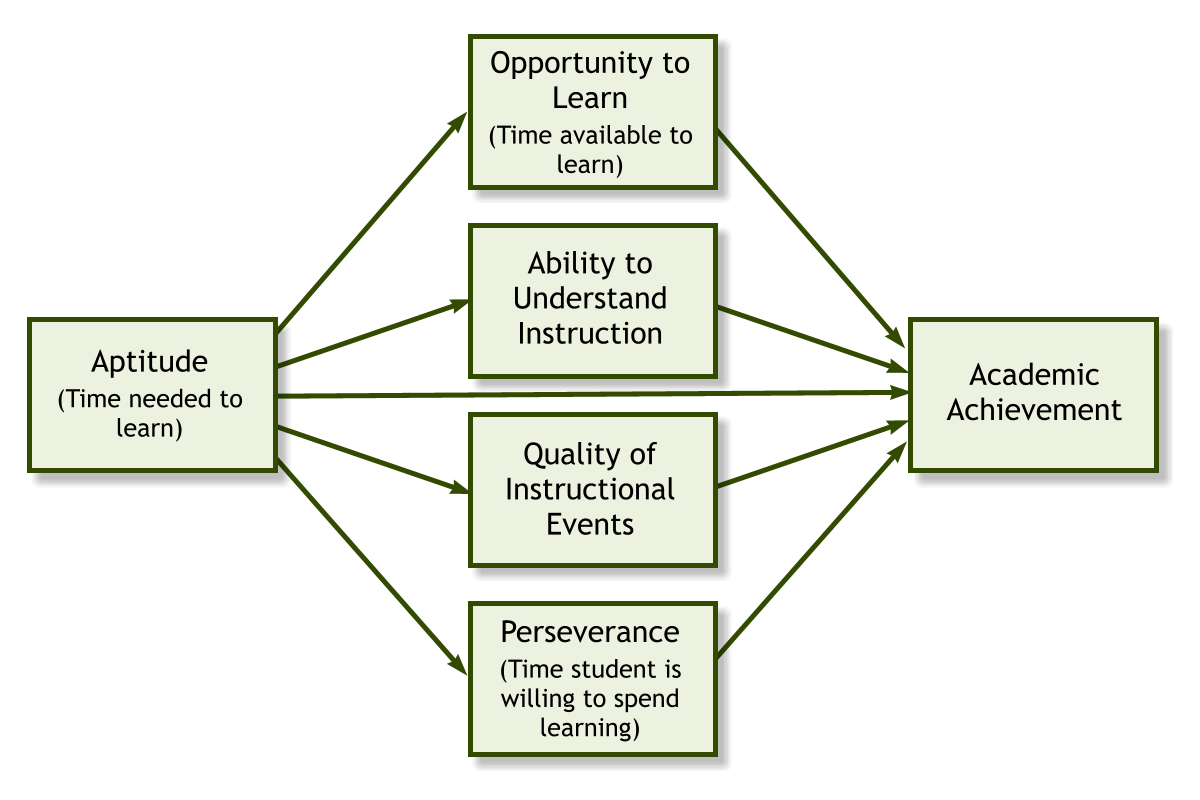

The dramatic increase in time for amusements that young people in the USA enjoy suggests that the use of time in school is more important than ever. Carroll (1963) pointed educational researchers to the importance of classroom time nearly fifty years ago. Carroll’s model (see Figure 1) is a quasi-mathematical one in which three of the five classes of variables that explain variance in school achievement are expressed in terms of time. In a 25-year retrospective on his model, Carroll (1989) stated that “the model could still be used to solve current problems in education” (p. 26). I concur and recommend that educational designers and researchers reconsider his model and its emphasis on time as a critical variable in educational effectiveness (Anderson, 1985). Research and development initiatives that hope to be relevant must take time and its use by teachers and learners very seriously.
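Carroll's model is usually summarized as a single ratio. The rendering below is one common reading of Carroll (1963), not a reproduction of Figure 1, and the symbols are introduced here for illustration: the time needed reflects aptitude, ability to understand instruction, and quality of instruction, while the time actually spent is limited by opportunity to learn (time allowed) and perseverance (time the learner is willing to spend).

$$\text{Degree of learning} = f\!\left(\frac{t_{\text{spent}}}{t_{\text{needed}}}\right), \qquad t_{\text{spent}} = \min\left(t_{\text{opportunity}},\; t_{\text{perseverance}},\; t_{\text{needed}}\right)$$

Under this reading, extending opportunity (for example, a longer school day) raises learning only when perseverance is not the binding constraint, which is one way to see why the model points to how time is used, and not merely how much time is scheduled.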

Although it is obvious that teachers worry constantly about time, another variable that should concern them, but does not to a sufficient degree, is curricular alignment (Martone & Sireci, 2009). Herrington, Reeves, and Oliver (2010) stress the importance of this variable in educational design:

The success of any learning design, including authentic e-learning, is determined by the degree to which there is adequate alignment among eight critical factors: 1) goals, 2) content, 3) instructional design, 4) learner tasks, 5) instructor roles, 6) student roles, 7) technological affordances, and 8) assessment. (p. 108)

Misalignment is a major reason why so many innovative educational designs fail (Squires, 2009). Teachers may have clear objectives, high-quality content expertise, and strong pedagogical content knowledge, and may even apply advanced educational designs, yet be forced to focus their assessments on what is easy to measure rather than what is important. Research and development efforts that purport to be relevant must carefully consider the alignment among the critical dimensions of any learning environment (Reeves, 2006).

Yet another important issue for teachers, students, parents, and the public at large is the kinds of outcomes pursued in education. Student learning outcomes in K-12 education are traditionally defined in relation to three primary domains: cognitive, affective, and psychomotor. The cognitive domain focuses on the capacity to think, or one’s mental skills. Originally defined by Bloom, Engelhart, Furst, Hill, and Krathwohl (1956), the cognitive domain was revised by Anderson, Krathwohl, Airasian, Cruikshank, Mayer, Pintrich, Raths, and Wittrock (2001) into six levels: 1) remembering, 2) understanding, 3) applying, 4) analyzing, 5) evaluating, and 6) creating. The affective domain (Krathwohl, Bloom, & Masia, 1964) focuses on emotions and feelings, especially in relation to a set of values. It ranges from receiving, or becoming aware of stimuli that evoke feelings, to manifesting behavior characterized by a set of consistent and predictable values. The psychomotor domain (Harrow, 1972) is concerned with the mastery of physical skills ranging from reflex movements to exhibiting appropriate body language in non-discursive communication.

None of these domains is sufficiently internally consistent, much less aligned with the others, but despite their flaws, they can help educational designers consider a fuller range of learning objectives than might otherwise be included. Most curricular innovations target the cognitive domain rather than the affective or psychomotor, and within the cognitive domain much more attention is often paid to the lower half of the domain (remembering, understanding, and applying) than to the arguably more important upper half (analyzing, evaluating, and creating). This problem likely stems from the degree to which the skills in the lower half are tested in schools (Kohn, 2000; Nichols & Berliner, 2007).

Alarmingly, an entire domain is all too often ignored by teachers, educational designers, and educational researchers. Whereas the cognitive domain is concerned with thinking, the affective with valuing, and the psychomotor with skilled behavior, the neglected conative domain (Snow, Corno, & Jackson, 1996) is associated with action. The neglect of the conative domain in early education has serious detrimental results throughout a lifetime. Gawande (2007) describes how healthcare practitioners may possess the cognitive capacity, affective values, and physical skills to perform a given task (e.g., cleansing hands thoroughly before interacting with a patient in a hospital), but whether that physician, nurse, or other healthcare practitioner possesses the will, desire, drive, level of effort, mental energy, intention, striving, and self-determination to actually perform the task according to the highest standards possible remains dubious in far too many cases (Duggan, Hensley, Khuder, Papadimos, & Jacobs, 2008).

The conative domain focuses on conation, or the act of striving to perform at the highest levels. Despite the obvious importance of this type of volitional learning outcome in many disciplines, the literature on educational design appears to be insufficiently informed by consideration of the conative domain. The roots of the construct of conation can be traced all the way back to Aristotle, who used the Greek word ‘orexis’ to signify striving, desire, or the conative state of mind. Kolbe (1990) contrasted the cognitive, affective, and conative domains as illustrated in Figure 2. Research intended to be relevant to the needs of learners and society at large should address the full array of learning domains.

Figure 2. The cognitive, affective, and conative domains contrasted (after Kolbe, 1990).

The pendulum between rigor and relevance may be moving toward the kind of balance recommended by Desforges (2001). To heighten the relevance of research that would have demonstrable impact of the kind and level heretofore missing in education, a refocusing of research and educational designs on the fundamental concerns of practitioners is necessary (Bransford et al., 2009). Academic learning time, alignment, and a full spectrum of learning domains (cognitive, affective, psychomotor, and conative) would be excellent starting points. But admittedly, it is often difficult to find funding for research and development related to such basic variables. It is much easier to find support for research and development related to new technologies. For example, the following funding priorities appeared in a request for proposals from the National Science Foundation in the USA in November 2010 under the heading “Teaching and Learning Applications.”

Computer and communication technologies have profoundly altered many aspects of our lives and seem to hold great promise for improving education as well. But technology is only a tool. Fulfilling its promise for education will require innovative education applications and tools that can be used for teaching and learning in traditional and non-traditional settings, such as video game-based applications, which not only have the potential to deliver science and math education to millions of simultaneous users, but also significantly affect learning outcomes in the STEM disciplines. The rapid and widespread use of computers, mobile phones, other personal electronic appliances and multi-player Internet games have provided users with engaging new interfaces and forms of immersion in both virtual and augmented realities.

Could it be possible to serve both the interest of funding agencies in advancing new technologies and the fundamental concerns of teachers, learners, parents, and society noted above, such as time, alignment, and multiple learning domains? Educational design research (Kelly et al., 2008; van den Akker et al., 2006), an approach that also strikes an appropriate balance between rigor and relevance, is the key.

Serious Games and Educational Design Research

Foremost among the digital instructional modalities attracting enormous attention and substantial funding today are so-called “serious games” (Federation of American Scientists, 2006; Ritterfeld, Cody, & Vorderer, 2009). Some have suggested that games are the ideal vehicle for increasing learning time in and out of school (Prensky, 2006; Shaffer, 2006). Writing in the journal Science, Mayo (2009) observed that “Finally, with all else being equal, games invite more time on task. Teenagers commonly spend 5 to 8 hours per week playing games, and this equals or surpasses the time spent on homework each week” (p. 80).

The problem with the current interest in educational games is that, although the amount of time young students spend with games and other mediated diversions has increased dramatically, only a small fraction of that time affords them opportunities to learn academically relevant knowledge and skills (Gee, 2007). Ever since the development of serious games and simulations for learning began more than 40 years ago (Abt, 1970; Boocock & Schild, 1968), educational researchers have sought to compare the effects of game playing on learning with those of traditional instruction (cf. Van Sickle, 1986). Until recently, the results have not been impressive. Based on a meta-analysis of 361 studies, Hattie (2009) reported an effect size of only 0.33 for interactive games and simulations, which falls below the 0.40 “hinge point” that Hattie treats as the threshold for a meaningful educational effect.
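Effect sizes like the one Hattie reports are standardized mean differences. The sketch below is only an illustration of that basic arithmetic, assuming the common Cohen's d with a pooled standard deviation; Hattie's synthesis aggregates many meta-analyses, so this is not his procedure. The function names `cohens_d` and `meets_hinge_point` and the sample scores are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

# Hattie's (2009) "hinge point": effects at or above this are treated as meaningful.
HINGE_POINT = 0.40


def cohens_d(treatment, control):
    """Standardized mean difference (Cohen's d) using the pooled sample SD."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * stdev(treatment) ** 2
                  + (n2 - 1) * stdev(control) ** 2) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_var)


def meets_hinge_point(d, threshold=HINGE_POINT):
    """True if an effect size reaches the benchmark threshold."""
    return d >= threshold


# Hypothetical post-test scores for a game group and a comparison group.
game_group = [72, 75, 78, 80, 85]
comparison_group = [70, 72, 74, 78, 81]
d = cohens_d(game_group, comparison_group)
print(f"d = {d:.2f}, meets hinge point: {meets_hinge_point(d)}")

# The games-and-simulations effect Hattie reported falls short of the benchmark.
print(meets_hinge_point(0.33))
```

The 0.40 value is Hattie's benchmark for his synthesis rather than a universal standard, which is why it is passed as an adjustable parameter here.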

More promising results are beginning to emerge from large-scale educational design research initiatives such as Quest Atlantis (http://atlantis.crlt.indiana.edu/) (Barab, Gresalfi, & Ingram-Goble, 2010), River City (http://muve.gse.harvard.edu/rivercityproject/) (Dede, 2009a), and Whyville (http://www.whyville.net/) (Neulight, Kafai, Kao, Foley, & Galas, 2007), three curricular innovations designed to enhance science education through student engagement with online learning game environments. A foundational design principle of all three of these interactive learning environments is that immersion in a virtual world enables the design and implementation of “educational experiences that build on students’ digital fluency to promote engagement, learning, and transfer from classroom to real-world settings” (Dede, 2009b, p. 66). However, although the educational effectiveness of these immersive learning environments has been supported by extensive educational design research, they are still used in only a tiny fraction of schools. Owston (2009) reminds designers and researchers of the critical importance of professional learning opportunities for teachers whenever new technologies are introduced into schools.

My own work with learning games and simulations is largely related to training for physicians, emergency medical providers, and other healthcare personnel, and my particular role in the educational design of medical learning games and simulations focuses on evaluation and validation. Technological advances such as virtual reality and haptic interfaces are extending the application of serious games into virtually all sectors of healthcare education and training (John, 2007). However, there has not been sufficient attention to validating serious games in these areas, and some researchers remain skeptical about their effectiveness. For example, in the preface to their edited book titled Computer Games and Team and Individual Learning, O’Neil and Perez (2008) stated:

While effectiveness of game environments can be documented in terms of intensity and longevity of engagement (participants voting with their quarters or time), as well as the commercial success of games, there is much less solid empirical information about what learning outcomes are systematically achieved by the use of individual and multiplayer games to train adult participants in acquiring knowledge and skills. (p. ix)

Traditional approaches to researching the effectiveness of serious games and simulations for learning focus on comparing games and simulations with other modes of instruction (Coller & Scott, 2009; Vogel, Vogel, Cannon-Bowers, Bowers, Muse, & Wright, 2006). As noted above, a major weakness of traditional experiments comparing different instructional modes is that they often yield no significant differences (Russell, 2001). Educational design research differs from the traditional experimental approach in that the latter simply informs designers and researchers about which instructional mode (e.g., classroom instruction vs. immersive game environment) led to greater outcomes, whereas educational design research methods seek to explain differential outcomes in terms of variation in the design features of different modes. Educational design researchers investigate design features, not just alternative instructional modes, which allows them to identify which design feature is more effective than another with respect to a specific outcome, and why.

Educational designers of curricular innovations recognize that it is important to keep in mind the role of the innovation within an overall curriculum. Learning is accomplished through a complex array of formal and informal activities, experiences, and interactions. The processes involved in learning are too complex to definitively identify the contribution of any one kind of activity, experience, or interaction, and thus there is always a probabilistic aspect to any assertions about the efficacy of a particular educational innovation. As Cronbach (1975) famously wrote about educational treatments: “when we give proper weight to local conditions, any generalization is a working hypothesis, not a conclusion” (p. 125).

Given this complexity, validation studies play an important role in establishing the credibility of a curricular innovation within a specific context of use and for a particular set of decision-makers. In the context of learning games, for example, Baker and Delacruz (2008) state that “validity inferences depend upon the use to which the findings will be put, with uses including classifying the learner (or group), determining rank order, making selections, determining next instructional sequences, and so on” (p. 23).

Educational designers and researchers who collaborate to develop serious learning games and simulations must seek to establish six different types of validity:

- Face Validity: On the face of it, does the game or simulation seem to be a credible representation of the domain(s) of interest?

- Content Validity: Does the game or simulation encompass the appropriate content and breadth of content?

- Learning Validity: To what extent does the game or simulation afford sufficient opportunity and support for learning?

- Curriculum Validity: To what extent is the game or simulation appropriately aligned with other curricular components?

- Construct Validity: To what extent does performance in a game or simulation distinguish novices from experts, or less experienced students from more experienced ones?

- Predictive (Concurrent) Validity: To what extent does performance in a game or simulation transfer to performance in the real world?
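Two of these validity types, construct and predictive (concurrent) validity, are often examined with simple statistics. The following is a minimal sketch rather than a procedure from this paper: it assumes construct validity is probed with a known-groups comparison (a standardized expert-novice gap in game scores) and predictive validity with a Pearson correlation between game scores and a real-world criterion, such as a hypothetical clinical performance rating. All function names and data are hypothetical, and what counts as adequate evidence depends on the intended use of the findings (Baker & Delacruz, 2008).

```python
from math import sqrt
from statistics import mean, stdev


def known_groups_d(experts, novices):
    """Construct validity sketch: standardized gap between expert and novice game scores.

    Uses Cohen's d with a pooled sample SD; a clearly positive value means the
    game separates the two groups in the expected direction.
    """
    n1, n2 = len(experts), len(novices)
    pooled_var = ((n1 - 1) * stdev(experts) ** 2
                  + (n2 - 1) * stdev(novices) ** 2) / (n1 + n2 - 2)
    return (mean(experts) - mean(novices)) / sqrt(pooled_var)


def pearson_r(game_scores, criterion_scores):
    """Predictive (concurrent) validity sketch: correlation between in-game
    performance and a real-world criterion measured on the same people."""
    if len(game_scores) != len(criterion_scores) or len(game_scores) < 2:
        raise ValueError("need at least two paired observations")
    mx, my = mean(game_scores), mean(criterion_scores)
    sxy = sum((x - mx) * (y - my) for x, y in zip(game_scores, criterion_scores))
    sxx = sum((x - mx) ** 2 for x in game_scores)
    syy = sum((y - my) ** 2 for y in criterion_scores)
    return sxy / sqrt(sxx * syy)


# Hypothetical scores for illustration only.
expert_scores = [88, 92, 95, 90]
novice_scores = [70, 75, 68, 72]
print(f"expert-novice d = {known_groups_d(expert_scores, novice_scores):.2f}")

game = [55, 62, 70, 78, 85]
clinical_rating = [3.1, 3.4, 3.9, 4.2, 4.6]
print(f"game-criterion r = {pearson_r(game, clinical_rating):.2f}")
```

Face, content, learning, and curriculum validity are usually judged through expert review and alignment analysis rather than a single statistic, so they are not sketched here.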

Focusing on different types of validity is just one way that educational designers and researchers can collaborate in educational design research to advance the state of the art of serious games for learning and other curricular innovations. Multiple data collection methods and exemplars remain to be developed and/or refined, but their pursuit holds great promise for striking a desirable balance between rigor and relevance in educational research.

Conclusion

Rigor versus relevance will likely remain an ongoing debate among many social scientists. Ultimately, it is an issue that each individual educational researcher must confront and resolve. As Schön (1995) wrote:

In the swampy lowlands, problems are messy and confusing and incapable of technical solution. The irony of this situation is that the problems of the high ground tend to be relatively unimportant to individuals or to the society at large, however great their technical interest may be, while in the swamp lie the greatest problems of human concern. The practitioner [researcher] is confronted with a choice. Shall he remain on the high ground where he can solve relatively unimportant problems according to his standards of rigor, or shall he descend to the swamp of important problems where he cannot be rigorous in any way he knows how to describe? (p. 28)

How an individual resolves this dilemma is inevitably influenced by the kind and level of support available for one direction or another. In an effort to enhance the quality, value, and impact of educational research in the USA, the pendulum of federal support for educational research swung toward the rigor side during the first eight years of the first decade of this century, and there is now some evidence that it may be swinging toward the relevance side, although the jury is still out on this. But what is really needed is a balance between rigor and relevance.

Returning to the example of cancer research, Gladwell (2010) also described the work of Dr. Safi Bahcall, who holds a Ph.D. in theoretical physics but has dedicated his career to the search for effective cancer drugs. According to Gladwell, Bahcall maintains that “In physics, failure was disappointing. In drug development, failure was heartbreaking” (p. 69), because he and his colleagues are seeking to save the lives of the patients involved in their clinical trials. The continued failure of educational researchers to have meaningful impact on real-world educational problems is also “heartbreaking.” To end this deplorable situation, new approaches are needed, and educational design research is an approach with enormous promise that may strike an optimal balance between the rigor educational researchers seek and the relevance that researchers and practitioners alike deserve. Let us in the Educational Designer community seriously take up the challenge of large-scale educational design research focused on the important educational problems of our time.

References

Berliner, D. C. (2002). Educational research: The hardest science of all. Educational Researcher, 31(8), 18-20.

Black, P. (2008). Strategic decisions: Ambitions, feasibility and context. Educational Designer, 1(1). Retrieved from http://www.educationaldesigner.org/ed/volume1/issue1/article1

Black, P. (2009). In response to: Alan Schoenfeld. Educational Designer, 1(3). Retrieved from http://www.educationaldesigner.org/ed/volume1/issue3/article12/index.htm

Burkhardt, H. (2009). On strategic design. Educational Designer, 1(3). Retrieved from http://www.educationaldesigner.org/ed/volume1/issue3/article9/

Clark, R. E. (1983). Reconsidering research on learning from media. Review of Educational Research, 53(4), 445-459.

Cobb, P., & Gravemeijer, K. (2008). Experimenting to support and understand learning processes. In A. E. Kelly, R. A. Lesh, & J. Y. Baek (Eds.), Handbook of design research methods in education: Innovations in science, technology, engineering, and mathematics learning and teaching (pp. 68-95). New York: Routledge.

Cobb, P., & Smith, T. (2008). The challenge of scale: Designing schools and districts as learning organizations for instructional improvement in mathematics. In K. Krainer & T. Wood (Eds.), International handbook of mathematics teacher education: Vol. 3. Participants in mathematics teacher education: Individuals, teams, communities and networks (pp. 231-254). Rotterdam, The Netherlands: Sense.

Dede, C. (2009a). Learning context: Gaming, simulations, and science learning in the classroom. White paper commissioned by the National Research Council. Retrieved from http://www7.nationalacademies.org/bose/Dede_Gaming_CommissionedPaper.pdf

Desforges, C. (2001, August). Familiar challenges and new approaches: Necessary advances in theory and methods in research on teaching and learning. The Desmond Nuttall/Carfax Memorial Lecture, British Educational Research Association (BERA) Annual Conference, Cardiff. Retrieved from http://www.tlrp.org/acadpub/Desforges2000a.pdf

Federation of American Scientists. (2006). Summit on educational games: Harnessing the power of video games for learning. Retrieved from http://fas.org/gamesummit/

Rideout, V. J., Foehr, U. G., & Roberts, D. F. (2010). Generation M2: Media in the lives of 8- to 18-year-olds. Kaiser Family Foundation. Retrieved from http://kff.org/entmedia/upload/8010.pdf

Schoenfeld, A. H. (2009b). Bridging the cultures of educational research and design. Educational Designer, 1(2). Retrieved from http://www.educationaldesigner.org/ed/volume1/issue2/article5

Shavelson, R. J., & Towne, L. (Eds.). (2002). Scientific research in education. Washington, DC: National Academy Press.

Skehan, J. W., & Nelson, C. (Eds.). (2000). Creation controversy and the science classroom. Arlington, VA: National Science Teachers Association. Retrieved from http://www.nsta.org/pdfs/store/pb069x2web.pdf

About the Author

Since earning his Ph.D. at Syracuse University, Thomas C. Reeves, Professor Emeritus of Learning, Design, and Technology at The University of Georgia (UGA), has developed and evaluated numerous interactive learning programs for education and training. In addition to many presentations and workshops in the USA, he has been an invited speaker in other countries including Australia, Belgium, Brazil, Bulgaria, Canada, China, England, Finland, Italy, Malaysia, The Netherlands, New Zealand, Peru, Portugal, Russia, Singapore, South Africa, South Korea, Spain, Sweden, Switzerland, Taiwan, Tunisia, and Turkey. He is a former Fulbright Lecturer and a former editor of the Journal of Interactive Learning Research. His research interests include evaluation of educational technology, authentic learning environments, and instructional technology in developing countries. Professor Reeves is the co-founder of the Learning and Performance Support Laboratory (LPSL) at UGA. He retired from UGA in 2010 but remains active there as a Professor Emeritus. In 2003, he became the first AACE Fellow, and in 2010, he was made an ASCILITE Fellow. He lives in Athens, Georgia with his wife, Dr. Trisha Reeves, and their two dogs, Flyer and Spencer.